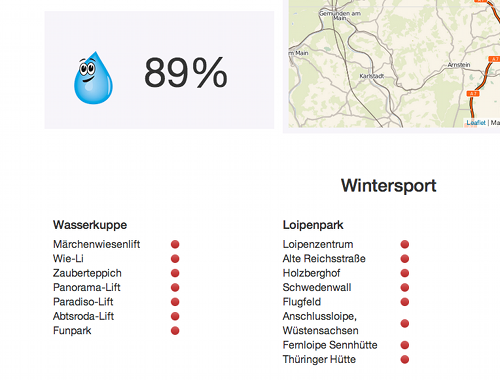

After my last post, two people contacted me asking for more current information on the website I had built. In particular, they were interested in the conditions of the winter sports facilities in our region (ski lifts, cross-country trails).

I looked around, and the only information available was on the websites of the facility operators. There was no central place where all the data was collected and made available. Since I had never done screen scraping before, I wasn’t really sure where to start.

Reading up on Stack Overflow and other resources, I learned that I had to fetch an HTML page, turn it into a DOM object, and find the spots that hold the status of each facility (closed, open, good conditions, red.gif, green.gif). Looking around, I found a nice helper library that served me very well for my first version: for every web page I wanted data from, I wrote a little PHP script to capture it.
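To make the "find the right places" step a bit more concrete, here is a minimal sketch of the idea. It is not my PHP code and it does not use the helper library; it is a Python illustration built on the standard library's `html.parser`. The markup in `SAMPLE_HTML`, the facility names, and the mapping from `green.gif`/`red.gif` to a status are all assumptions made up for the example. Real operator pages will look different.

```python
from html.parser import HTMLParser

# Assumed mapping from status icon file name to a readable status.
ICON_STATUS = {"green.gif": "open", "red.gif": "closed"}


class StatusParser(HTMLParser):
    """Collects (facility name, status) pairs, one per table row."""

    def __init__(self):
        super().__init__()
        self.results = []
        self._in_cell = False
        self._name = None
        self._status = None

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            # A new row starts: forget what we saw in the previous one.
            self._name, self._status = None, None
        elif tag == "td":
            self._in_cell = True
        elif tag == "img":
            # Look only at the file name, not the full path of the icon.
            src = dict(attrs).get("src") or ""
            icon = src.rsplit("/", 1)[-1]
            if icon in ICON_STATUS:
                self._status = ICON_STATUS[icon]

    def handle_data(self, data):
        text = data.strip()
        # The first non-empty cell text in a row is taken as the name.
        if self._in_cell and text and self._name is None:
            self._name = text

    def handle_endtag(self, tag):
        if tag == "td":
            self._in_cell = False
        elif tag == "tr" and self._name and self._status:
            self.results.append((self._name, self._status))


def parse_status(html):
    """Return a list of (name, status) tuples found in the HTML."""
    parser = StatusParser()
    parser.feed(html)
    parser.close()
    return parser.results


# Made-up markup standing in for an operator's status page.
SAMPLE_HTML = """
<table>
  <tr><td>Lift A</td><td><img src="/img/green.gif"></td></tr>
  <tr><td>Trail B</td><td><img src="/img/red.gif"></td></tr>
</table>
"""

if __name__ == "__main__":
    for name, status in parse_status(SAMPLE_HTML):
        print(name, status)
```

Every site needs its own rules like these, which is why I ended up with one small script per web page.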

This worked well for the first facility, whose website was quite responsive. The second one gave me more trouble with response times: now I had a 6-second wait before my page displayed. That wasn’t really acceptable, because I still have two more sites to scrape.

So I took Saturday afternoon to make it work asynchronously. It turned out to be quite easy: I kept my PHP scripts but converted them into functions that can be called via AJAX and return JSON data. From there it took only a couple more minutes, and I was finished. The page itself displays really fast again, and since the scraped information sits below the initially visible part of the page, it can take a little longer to load. But even scrolling down right away is fun: I enjoy watching the data show up!
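For anyone curious why this helps, here is a rough sketch of the pattern, again in Python rather than my actual PHP and JavaScript. On my site the browser fires the AJAX calls; in this sketch a thread pool plays that role, which is a stand-in and not the same mechanism. The facility names, the `delay` values that simulate slow operator sites, and the hard-coded `"open"` status are all invented for the illustration.

```python
import json
import time
from concurrent.futures import ThreadPoolExecutor, as_completed


def scrape_facility(name, delay):
    """Stand-in for one scraper; `delay` simulates a slow operator site."""
    time.sleep(delay)
    return {"facility": name, "status": "open"}


# Hypothetical facilities with simulated response times in seconds.
FACILITIES = [("Lift A", 0.05), ("Trail B", 0.3), ("Lift C", 0.1)]


def scrape_all(facilities):
    """Start all scrapers at once; return JSON strings in order of arrival."""
    payloads = []
    with ThreadPoolExecutor(max_workers=len(facilities)) as pool:
        futures = [pool.submit(scrape_facility, n, d) for n, d in facilities]
        # as_completed yields each result as soon as it is ready,
        # so a slow site no longer holds up the fast ones.
        for future in as_completed(futures):
            payloads.append(json.dumps(future.result()))
    return payloads


if __name__ == "__main__":
    start = time.perf_counter()
    for payload in scrape_all(FACILITIES):
        print(payload)
    print(f"total: {time.perf_counter() - start:.2f}s")
```

The point is that the total wait is roughly that of the slowest site instead of the sum of all of them, and each piece of data can be shown as soon as it arrives.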